One in a Million Is Probably Fine, Right?

Ship it!

Over the past few decades, the act of logging in to a website has seen many shifts — most of them net positives. Passwords alone didn’t cut it, so in comes two-factor authentication. Two-factor authentication is annoying as hell, so say hello to passkeys! Etc.

Quick question, though: what the hell?

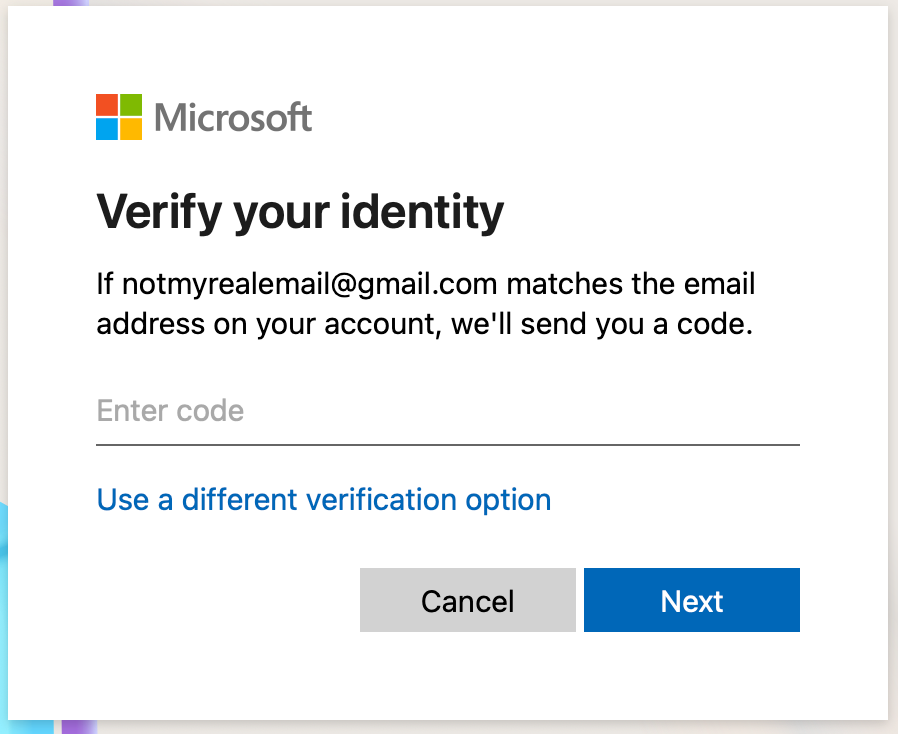

Pictured above is what I see[1] when I try to recover access to my Microsoft account. How helpful, Microsoft! A simple six-digit code is all I need to reset the password for “my” account. Thank you!

Ah, but wait… what if (crazy idea here) someone (never been done before) were to (impossible) just put in a random number (technology doesn’t exist probably) and see if it works? What if someone were to hit that one-in-a-million jackpot? What treasures might they unearth? Confidential emails; Azure accounts; OneDrive; BitLocker recovery codes; all this and more would be theirs.

The math is incredibly straightforward: a six-digit code, with 10 possibilities for each digit, yields 1,000,000 possible combinations. Wow, that’s a lot! It sure is, buddy. Go tell a cryptographer that you’ve created a password hashing scheme with “only” a 1-in-a-million chance of a collision. See how many accolades you get.

At the very least, could you please have the decency to increase the problem space? I know we don’t see value in STEM majors taking humanities courses[2] and thus barely understand the mysterious technology known as letters, but check it: these rambunctious little dudes can be used for more than LLM prompts, if you can even believe it. No, they have another purpose, locked away in the annals of history until now: radically increasing the possible combinations of an N-digit code.

Even removing the characters which are oft-confused for one another[3], you could increase the possible combinations from 1,000,000 to 887,503,681. For free! Costs nothin’! All this without even daring to ask the forbidden question: maybe we should use more than six digits?
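For the skeptics, here is a quick sketch of that arithmetic. It assumes a case-insensitive alphabet (26 letters plus 10 digits) with the five look-alike characters removed, which leaves 31 symbols; that assumption is mine, chosen because 31^6 matches the 887,503,681 figure above. The names `SAFE_ALPHABET`, `keyspace`, and `entropy_bits` are made up for illustration.

```python
import math

DIGITS = "0123456789"
# Assumption: codes are case-insensitive, so the full alphabet is 26 letters + 10 digits.
ALPHANUMERIC = "ABCDEFGHIJKLMNOPQRSTUVWXYZ" + DIGITS
# The look-alikes from the footnote (1, I, l; 0, o), written in upper case.
CONFUSABLE = set("1IL0O")
SAFE_ALPHABET = "".join(c for c in ALPHANUMERIC if c not in CONFUSABLE)


def keyspace(alphabet_size: int, length: int) -> int:
    """Number of distinct codes of the given length over the given alphabet."""
    return alphabet_size ** length


def entropy_bits(alphabet_size: int, length: int) -> float:
    """Bits of entropy in a uniformly random code."""
    return length * math.log2(alphabet_size)


if __name__ == "__main__":
    for label, size in (("digits only", len(DIGITS)), ("safe alphanumeric", len(SAFE_ALPHABET))):
        print(f"{label}: {size} symbols, {keyspace(size, 6):,} codes, "
              f"{entropy_bits(size, 6):.1f} bits")
```

Same six characters to type, roughly 887 times as many codes to guess.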

Would you like me to reveal how I know that you — yes, you, the engineer who implemented this system in your own app — know that this is unacceptable?

I know it because I’ve seen you get it right when you weren’t being watched.

When I log into Substack, they send me an email with a 6-digit code. Reprehensible! But helpfully, alongside the code, there’s a friendly clickable link, quivering with anticipation that I might give it a cheeky bap with this little thumb of mine.

You know what that link contains? Often, it’s not a six-digit code! Instead — depending on the app — it’s either:

- A very long, randomly-generated ID which is correlated with some database entry
  - The possible combinations of this ID are so intuitively numerous as to be unworthy of further discussion. Big number! The biggest.

- A JWT, or something like it[4]
  - Signed by asymmetric (or, less commonly, symmetric) cryptography which we cannot currently break within many human lifetimes.
  - This is what Substack uses

And why? Why do you do this? If the six-digit code is all you need, why not just put it into the link? Why go to the trouble of making the link so secure; so protected against malicious actors and forgery? It’s just a simple alternative to typing in the code, right?

You made the link actually secure because you knew the product manager pushing you to build this whole feature wasn’t going to complain about user metrics, because the link is equally clickable regardless of how secure it is. You saw an avenue to, at the very least, do one thing in a way which doesn’t throw caution to the wind. I’m sure they’ll force you to ruin even this; just give ‘em time.

The people who made the decision to secure critical accounts[5] behind a “guess the number I’m thinking of” game either do not know or are disincentivized from caring about what they are doing. They are product managers or “product-minded” engineers who are given no space to prioritize anything but metrics; driven solely by an insatiable demand from above their station to make comfy numbers bigger and bad vibes numbers smaller[6]. And then Google rolls it out and Microsoft rolls it out and now it’s an industry standard you see! and well-meaning, competent people who absolutely should know better are convinced that this is somehow acceptable and they should copy it because, well, if it’s good enough for Microsoft…

Here’s a bold claim: you actually owe things to your users. When you choose to store their data or host their account, you are entering into a sacred commitment to do right by them and to be a responsible steward of the trust they have placed in you. Sometimes, that means doing something which is slightly more difficult but ultimately in your and your users’ best interests. You may think this sounds corny, and to that I say: I don’t care. The responsibility exists whether you choose to honor it or to mock it.

Oh, but we’ll add rate limiting and max retry counts and uhhhh, hold on guys, ignore that email you just received from me; I think my account was hacked. Crazy. Anyways, a limit of 10 tries is probably fine, right? No, it’s not fine. Botnets exist. Attacks at scale exist. Criminals know how to write code, too.
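Here is a rough back-of-the-envelope sketch of why a per-account retry cap doesn't settle the matter. It assumes codes are uniformly random, every guess against an account is distinct, and each targeted account gets exactly one round of guesses. The 100,000-account figure is an illustrative assumption, not a measurement of any real attack.

```python
def guess_success_probability(attempts: int, keyspace: int = 10**6) -> float:
    """Chance that one of `attempts` distinct guesses matches a single code."""
    return min(attempts, keyspace) / keyspace


def expected_takeovers(accounts: int, attempts: int, keyspace: int = 10**6) -> float:
    """Expected number of compromised accounts when each gets `attempts` guesses."""
    return accounts * guess_success_probability(attempts, keyspace)


def probability_of_any_takeover(accounts: int, attempts: int, keyspace: int = 10**6) -> float:
    """Chance that at least one targeted account falls."""
    p = guess_success_probability(attempts, keyspace)
    return 1 - (1 - p) ** accounts


if __name__ == "__main__":
    accounts, tries = 100_000, 10  # hypothetical campaign size and retry cap
    for label, space in (("6 digits", 10**6), ("6 safe alphanumerics", 31**6)):
        print(f"{label}: expected takeovers = {expected_takeovers(accounts, tries, space):.4f}, "
              f"P(at least one) = {probability_of_any_takeover(accounts, tries, space):.4f}")
```

Under those assumptions, ten tries against each of 100,000 accounts is expected to crack about one of them with six digits, and about one in a thousand campaigns that size would crack anything with the 31-symbol alphabet.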

In isolation, it may seem strange how angry this makes me. I’ve never had an account stolen due to the flaw I’m claiming here. There’s no huge epidemic of account takeovers in this manner. What’s the big deal?

You have to consider this against the backdrop of this increasingly-irresponsible industry within which I make my living. Users’ identity documents are stored, unencrypted and with no auto-deletion date, in publicly-accessible[7] S3 buckets. Everything is logged, and every employee can often access those logs. Data is never deleted, only marked inactive. “At-rest encryption”[8] is marketed as “industry-standard military-grade security”, proving only that the industry’s standards are laughable at best and maliciously garbage at worst. User convenience[9] is prioritized over security 99 times out of 100.

Enough is very, very much more than enough at this stage. We have reached unthinkable levels of enough-being-enough, and my goodness, they just keep making it more enough. Enough being enough is never enough for this enough-enoughing industry.

Stallman was right about pretty much everything except for toe jam’s status as food.

On The Other Hand…

There have been some pretty crazy claims about this authentication mechanism — for instance, one blogger wrote:

Oh, but we’ll add rate limiting and max retry counts and uhhhh, hold on guys, ignore that email you just received from me; I think my account was hacked. Crazy. Anyways, a limit of 10 tries is probably fine, right? No, it’s not fine.

- An ideologue delivering a polemic

Okay, maybe it’s at least a little fine.

I’m sure that Microsoft employs a suite of sophisticated risk analyses, heuristics, adaptive rate limiting, attack monitoring, etc. I’m sure they had very smart engineers run the numbers and determine that they could design the system such that account takeovers were at an acceptably low level. I’m confident that, if a knowledgeable engineer at Microsoft were to read this blog post, they would be able to throw out a litany of backend systems which have been carefully designed to mitigate the exact concerns I’m describing.

I’m just also sure[10] that the 6-numeric-digit specification was a target set by product managers trying to reduce friction such that they can prove that they’re more valuable than other product managers, and that the engineers who designed the system argued (assuming a platform to do so was given) in favor of increasing the number of digits or the size of the character set. And I’m reasonably confident that the mitigations (band-aids) put in place to improve the security of the system have a lower effort-to-impact ratio than just adding a digit or two.

Look: it’s incredibly difficult to trust that major institutions, in 2025, are reaching decisions through a process of results-oriented, rational inquiry with a focus on getting the right people in the room and doing right by their legal and moral responsibilities to their users. I mean, come on: doesn’t that sentence just make you want to throw up in your mouth, even a tiny bit? Not because acting in such a way is bad; not because it’s impossible; but because it’s insufferably naive[11] to expect it to take place in modern corporate America. And to hold a corporation morally responsible for operating in such a way? What are you, twelve years old?

The modus operandi these days for those trapped inside large modern software companies seems to go like this[12]:

1. Identify a metric you would like to improve (likely because you’ve been told you must by someone who has power over you)
2. Figure out a way to improve said metric
3. When concerns or pushback are raised by your peers or subordinates:
   - If the concern can be addressed without meaningfully stopping you from A) improving the metric or B) doing so as fast as possible, address it!
   - If the concern cannot be addressed without harming A or B above, get rid of the concern with that corporate manipulation you’ve been forced to become so good at.[13]
4. Get laid off and replaced by AI which doesn’t work

This is not exactly a recipe for joyful, innovative, ethical creation, is it?

So I don’t know. Maybe it’s all fine. It’s probably very far from the biggest software security threat which impacts users today. But despite the hedging and the uncertainty… it’s clearly not a system which was designed by any process I would describe as joyful; principled; user-focused. And that’s never going to be acceptable to me.

[1] More accurately, it’s what the criminals who have been regularly trying to get into my account on a continuous basis see.

[2] To be clear, my respect for college as a mechanism for producing competent, well-rounded, learned individuals is infinitesimal in 2025. I also did not go to college, which clearly makes me an authority on the subject.

[3] 1, I, l; 0, o

[4] For the uninitiated, a JWT is just a piece of data which has been signed. Signing data allows you to mathematically prove that said piece of data was signed by you and hasn’t been modified or tampered with in any way.

[5] Read: betray their users’ trust completely

[6] This is structurally-enforced incompetence as opposed to individual incompetence. An incredibly intelligent person in such circumstances is necessarily going to experience pressures and incentives and disincentives which inevitably lead to the same outcome: we need to make the product shittier and less secure because look at this graph!

[7] Honestly, it doesn’t even matter if they’re publicly accessible, given how utterly terrible most companies seem to be at keeping their “internal” systems from being hacked. Stop storing data that will be used to destroy people if (when) you get hacked. Where is your sense of goddamn responsibility?

[8] 99% of the time, “at-rest encryption” just means “AWS encrypts the data on the physical disk”. This protects against somebody physically stealing said disk, which is a legitimate attack vector but is not the primary concern with data in the cloud. Data which is “at-rest encrypted” is still fully readable by the organization which holds it and the cloud provider which hosts the organization’s infrastructure. It is nothing. A paper-thin sheet of aluminum foil masquerading as an impenetrable steel wall.

[9] Not as a good unto itself but as a correlation with increased usage and, therefore, increased profits.

[10] Source: It would be very helpful for my argument if this were true

[11] ï

[12] Source: A lifetime of experience as both a consumer and engineer with engineer friends

[13] And why wouldn’t you? This is your job, which is to say: your survival. Increased risk of security lapses vs. the ability to feed your son… would a bookie even facilitate that bet?

Better odds than the lottery right?